Iceberg Catalog

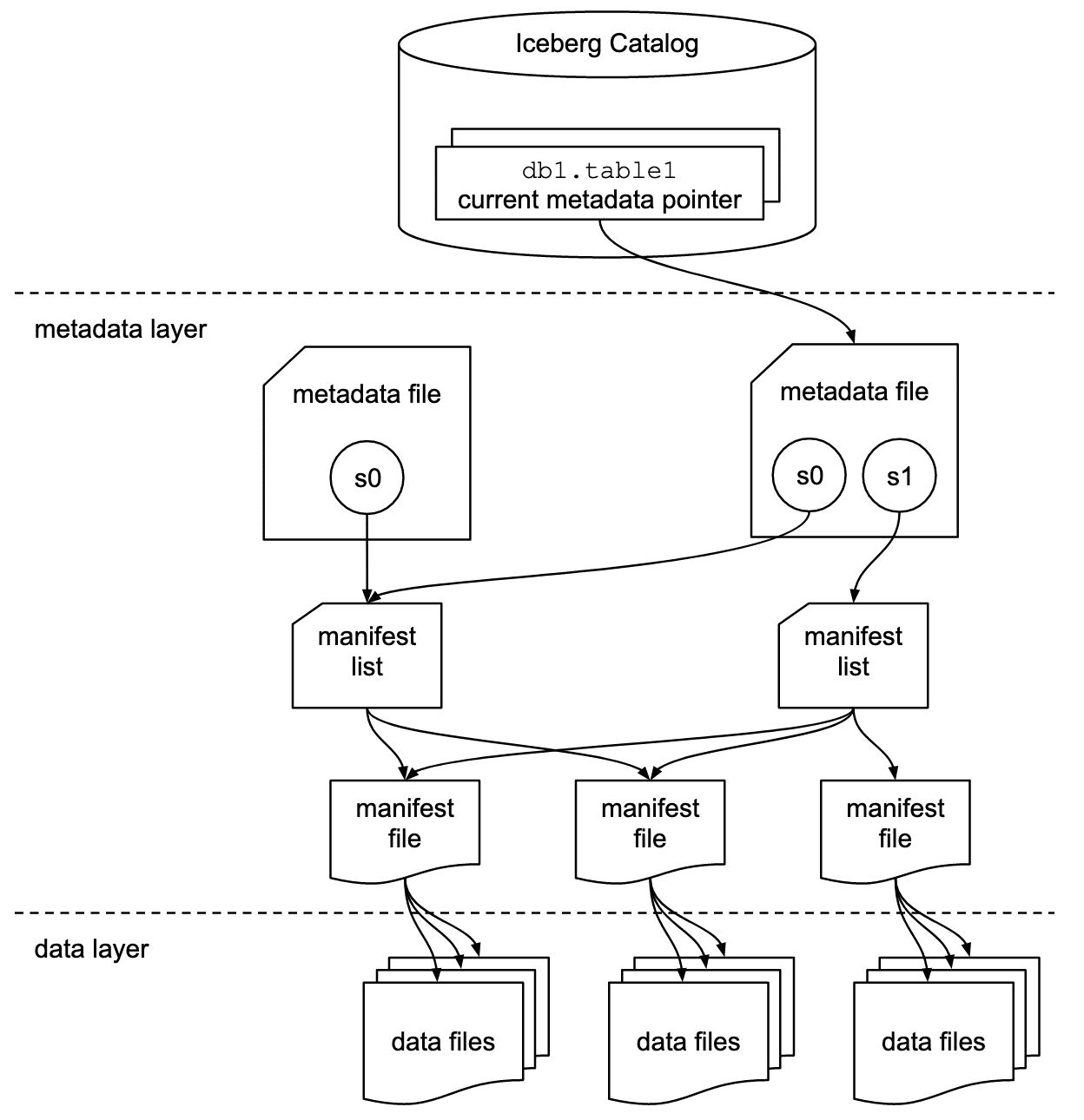

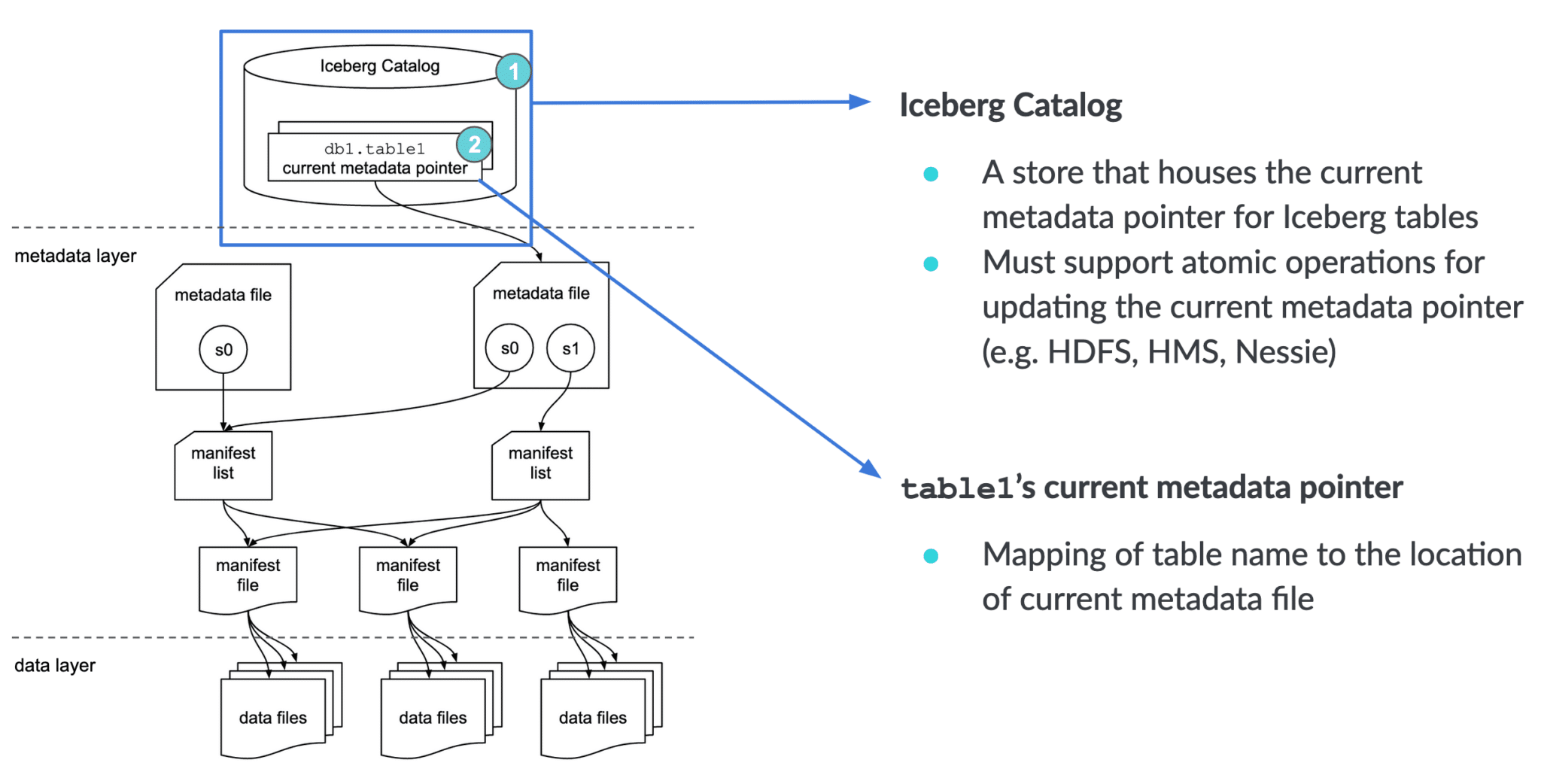

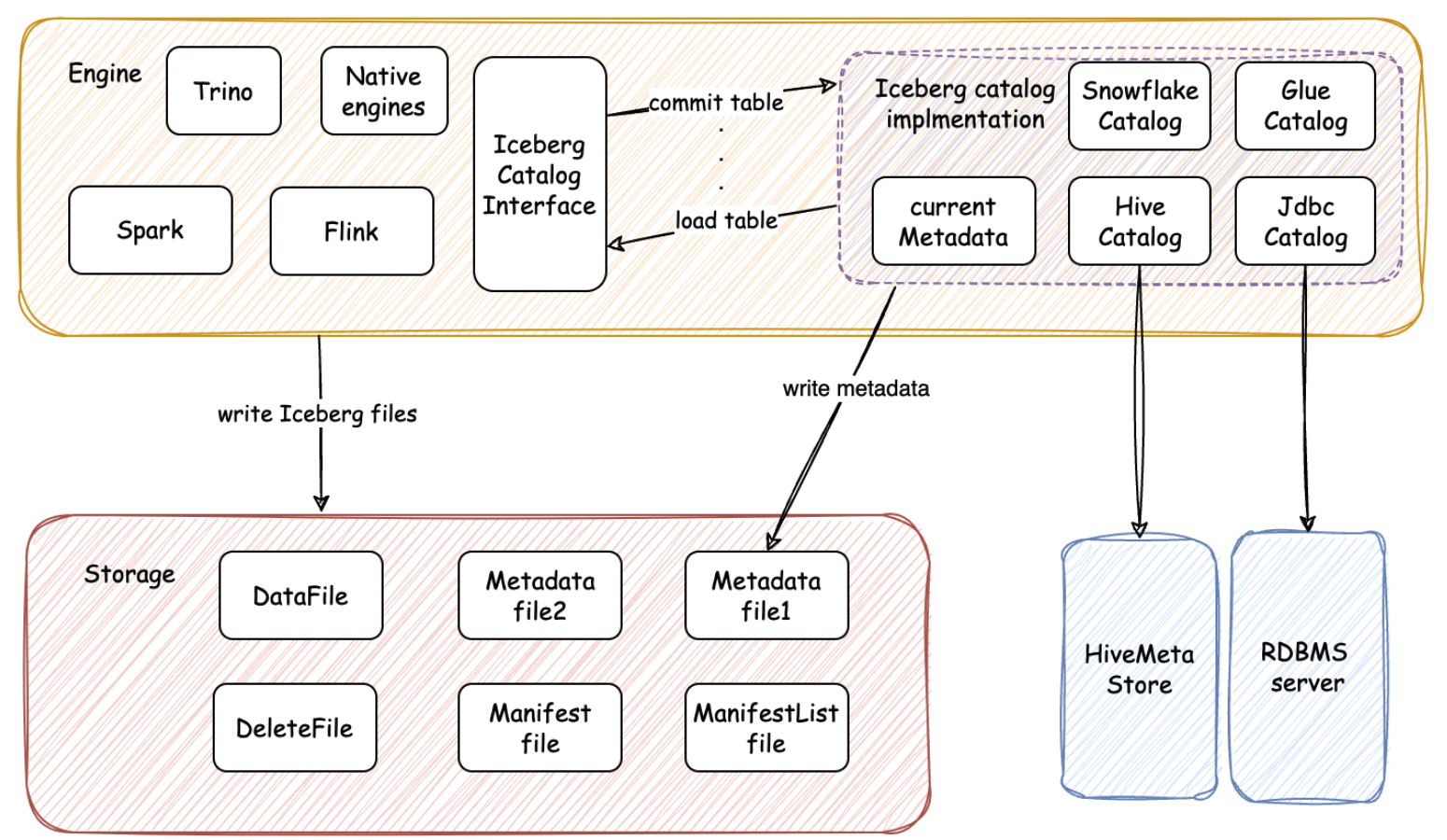

An Iceberg catalog is a metastore used to manage and track changes to a collection of Iceberg tables. The Apache Iceberg data catalog serves as the central repository for managing metadata related to Iceberg tables, and it is the component through which tables are discovered and managed. Its primary function involves tracking tables and atomically updating their metadata. Iceberg catalogs are flexible and can be implemented using almost any backend system.

Iceberg brings the reliability and simplicity of SQL tables to big data, while making it possible for engines like Spark, Trino, Flink, Presto, Hive, and Impala to safely work with the same tables at the same time. An Iceberg catalog is also a type of external catalog supported by StarRocks from v2.4 onwards, which lets you directly query data stored in Iceberg without manually creating tables.

To use Iceberg in Spark, first configure Spark catalogs.
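
Configuring a Spark catalog for Iceberg comes down to setting a handful of `spark.sql.catalog.<name>` properties. The sketch below builds those properties as a plain dictionary. The helper `iceberg_catalog_conf`, the catalog name `demo`, and the endpoint `http://localhost:8181` are assumptions made for illustration and do not come from the text above; the property keys follow the pattern documented for Iceberg's Spark integration.

```python
from typing import Dict, Optional


def iceberg_catalog_conf(
    name: str,
    catalog_type: str,
    uri: Optional[str] = None,
    warehouse: Optional[str] = None,
) -> Dict[str, str]:
    """Build Spark properties that register an Iceberg catalog called `name`.

    Hypothetical helper: it only assembles key/value pairs and does not
    talk to Spark or to any catalog service.
    """
    prefix = f"spark.sql.catalog.{name}"
    conf = {
        # Catalog implementation class shipped with Iceberg's Spark runtime.
        prefix: "org.apache.iceberg.spark.SparkCatalog",
        # Backend type, for example "hive", "hadoop", or "rest".
        f"{prefix}.type": catalog_type,
    }
    if uri is not None:
        conf[f"{prefix}.uri"] = uri
    if warehouse is not None:
        conf[f"{prefix}.warehouse"] = warehouse
    return conf


# Assumed catalog name and endpoint, for illustration only.
rest_conf = iceberg_catalog_conf("demo", "rest", uri="http://localhost:8181")
for key, value in rest_conf.items():
    print(f"--conf {key}={value}")
```

Each pair can be passed on the `spark-sql` or `spark-submit` command line as printed, or set on a session builder with `SparkSession.builder.config(key, value)`, provided the Iceberg Spark runtime is on the classpath.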

Iceberg catalogs can use any backend store, and catalog implementations can be plugged into any Iceberg runtime, allowing any processing engine that supports Iceberg to load the tables they track. A catalog helps track table names, schemas, and history. With a REST catalog, clients use a standard REST API interface to communicate with the catalog and to create, update, and delete tables.

Iceberg uses Apache Spark's DataSourceV2 API for data source and catalog implementations. In Spark 3, tables use identifiers that include a catalog name. The catalog table APIs accept a table identifier, which is a fully qualified table name. Metadata tables, like `history` and `snapshots`, can use the Iceberg table name as a namespace.
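
To make the identifier rules concrete, here is a minimal sketch of how a fully qualified name breaks down into catalog, namespace, and table, and how a metadata table name is formed by treating the table name as a namespace. `TableIdentifier` and `parse_identifier` are hypothetical helpers written for this illustration, not Iceberg's own API, and the naive split on `.` assumes no quoted name parts.

```python
from typing import NamedTuple, Tuple


class TableIdentifier(NamedTuple):
    """Illustrative stand-in for a fully qualified table identifier."""

    catalog: str
    namespace: Tuple[str, ...]
    table: str

    def qualified(self) -> str:
        # catalog.namespace(.namespace...).table
        return ".".join((self.catalog, *self.namespace, self.table))

    def metadata_table(self, name: str) -> str:
        # The table name acts as a namespace for metadata tables.
        return f"{self.qualified()}.{name}"


def parse_identifier(identifier: str) -> TableIdentifier:
    """Split 'catalog.ns1[.ns2...].table' into its parts."""
    parts = identifier.split(".")
    if len(parts) < 3 or not all(parts):
        raise ValueError(f"expected catalog.namespace.table, got {identifier!r}")
    return TableIdentifier(parts[0], tuple(parts[1:-1]), parts[-1])


# Assumed names, for illustration only.
events = parse_identifier("demo.db.events")
print(events.metadata_table("snapshots"))  # demo.db.events.snapshots
print(events.metadata_table("history"))    # demo.db.events.history
```

In Spark SQL, a name such as `demo.db.events.snapshots` can then be queried like any other table, for example `SELECT * FROM demo.db.events.snapshots`.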

Flink + Iceberg + Object Storage: Building a Data Lake Solution

GitHub spancer/icebergrestcatalog Apache iceberg rest catalog, a

Introducing Polaris Catalog An Open Source Catalog for Apache Iceberg

Apache Iceberg Frequently Asked Questions

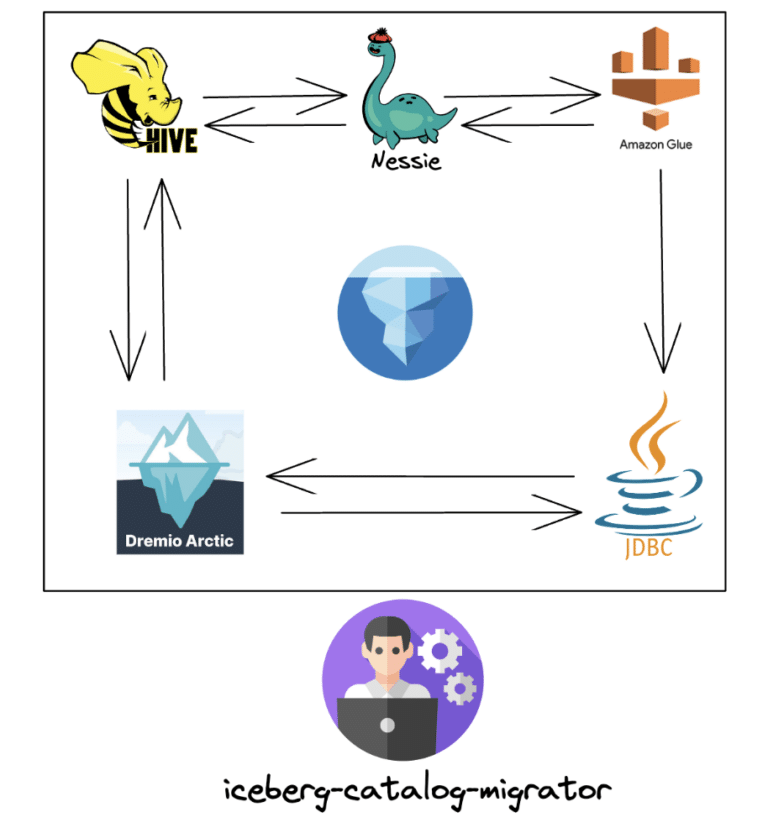

Introducing the Apache Iceberg Catalog Migration Tool Dremio

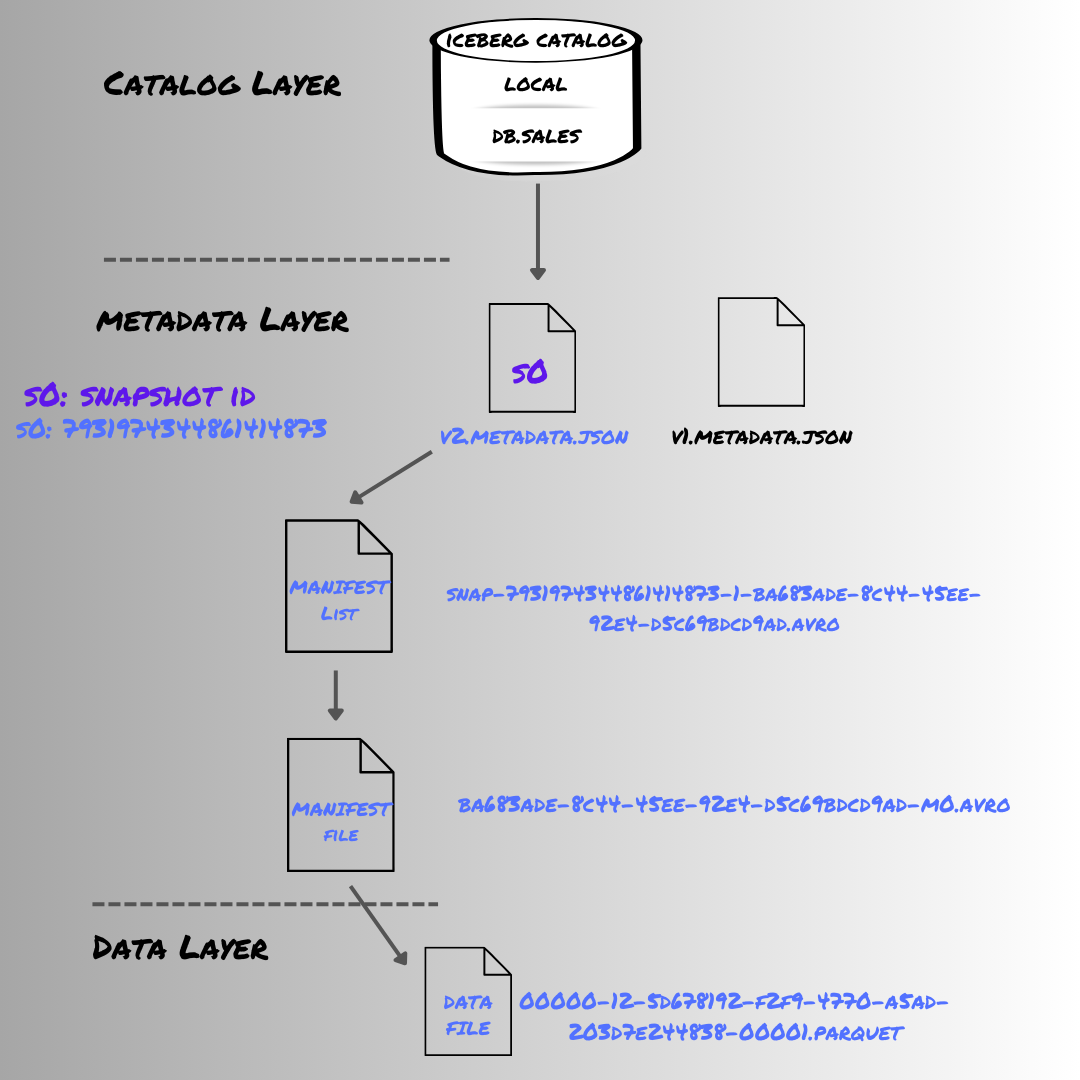

Apache Iceberg An Architectural Look Under the Covers

Gravitino NextGen REST Catalog for Iceberg, and Why You Need It

Apache Iceberg Architecture Demystified

Understanding the Polaris Iceberg Catalog and Its Architecture
